I am currently a PhD student at Stanford, studying optimization and machine learning with Stephen Boyd, but from 2017 through 2018 I was a software engineer on the Google Brain team. I started three months after receiving a Master’s degree in computer science (also from Stanford), having just spent the summer working on a research project—a domain-specific language for convex optimization. At the time, a part of me wanted to continue working with my advisor, but another part of me was deeply curious about Google Brain. To see a famous artificial intelligence (AI) research lab from the inside would, at the very least, make for an interesting anthropological experience. So, I joined Google. My assignment was to work on TensorFlow, an open-source software library for deep learning.

Brain was a magnet for Google’s celebrity employees. For the past few years, Google’s CEO Sundar Pichai (who believes AI is “more profound than electricity or fire”) has emphasized that Google is an “AI-first” company, with the company seeking to implement machine learning in nearly everything it does. In a single afternoon, in the team’s kitchenette, I saw Pichai, co-founder Sergey Brin, and Turing Award winners David Patterson and John Hennessy.

I didn’t work with these celebrity employees, but I did get to work with some of the original TensorFlow developers. These developers gave me guidance when I sought it and habitually gave me more credit than I deserved. For example, my coworkers let me take the lead in writing an academic paper about TensorFlow 2, even though my contributions to the technology were smaller than theirs. The unreasonable amount of trust placed in me, and credit given to me, made me work harder than I would have otherwise.

The culture of Google Brain reminded me of what I’ve read about Xerox PARC. During the 1970s, researchers at PARC paved the way for the personal computing revolution by developing graphical user interfaces and producing one of the earliest incarnations of a desktop computer.

PARC’s culture is documented in The Power of the Context, an essay written by PARC researcher Alan Kay. Kay describes PARC as a place where senior employees treated less experienced ones as “world-class researchers who just haven’t earned their PhDs yet” (similar to how my coworkers treated me). Kay goes on to say that researchers at PARC were self-motivated and capable “artists,” working independently or in small teams towards similar visions. This made for a productive environment that at times felt “out of control”:

> A great vision acts like a magnetic field from the future that aligns all the little iron particle artists to point to “North” without having to see it. They then make their own paths to the future. Xerox often was shocked at the PARC process and declared it out of control, but they didn’t understand that the context was so powerful and compelling and the good will so abundant, that the artists worked happily at their version of the vision. The results were an enormous collection of breakthroughs, some of which we are celebrating today.

At Brain, as at PARC, researchers and engineers had an incredible amount of autonomy. They had bosses, to be sure, but they had a lot of leeway in choosing what to work on, in finding “their own paths to the future.” (I say “had”, not “have”, since I’m not sure whether Brain’s culture has changed since I left.)

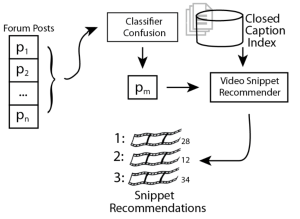

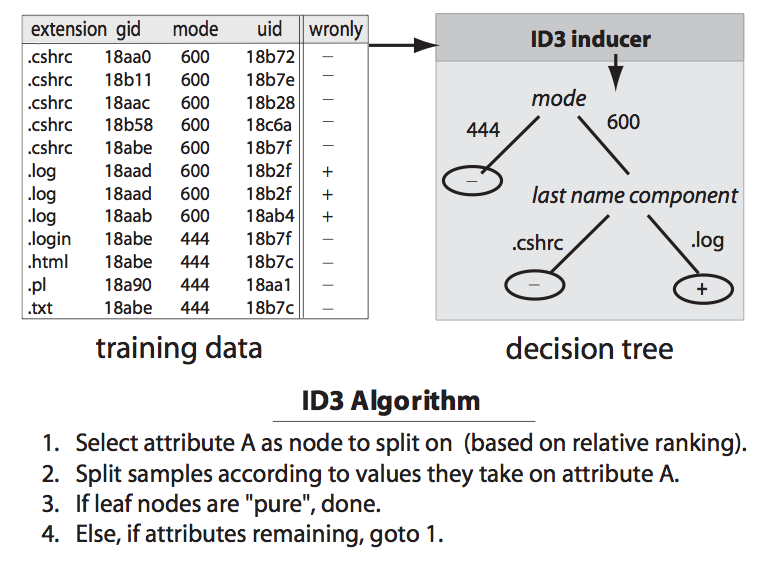

I’ll give one example: a few years ago, many on the Google Brain team realized that machine learning tools were closer to programming languages than to libraries, and that redesigning their tools with this fact in mind would unlock greater productivity. Management didn’t command engineers to work on a particular solution to this problem. Instead, several small teams formed organically, each approaching the problem in its own way. TensorFlow 2.0, Swift for TensorFlow, JAX, Dex, Tangent, AutoGraph, and MLIR were all different angles on the same vision. Some were in direct tension with each other, but each was improved by the existence of the others: we shared notes often and reused each other’s solutions when possible. Many of these tools may never become anything more than promising experiments, but it’s also possible that at least one will be a breakthrough.

I would guess that the PARC-like context that Brain operated in was instrumental in bringing about the creation of TensorFlow. In late 2015, Google open-sourced TensorFlow, making it freely available to the entire world. TensorFlow quickly became enormously popular. Instructors at Stanford and other universities used it in their curricula (my friend Chip Huyen, for example, created a Stanford course called TensorFlow for Deep Learning Research), researchers across the world used it to run experiments, and companies used it to train and deploy models in the real world. Today, measured by star count, TensorFlow is the fifth most popular project on GitHub, out of the many millions of public repositories hosted there.

And yet, at least for TensorFlow, Google Brain’s hyper-creative, hyper-productive, and “out of control” culture was a double-edged sword. In the process of making their own paths to a shared future, TensorFlow engineers released many features sharing a similar purpose. Many of these features were subsequently deemphasized in favor of more promising ones. While this process might have selected for good features (like tf.data and eager execution), it frustrated and exhausted our users, who struggled to keep up.

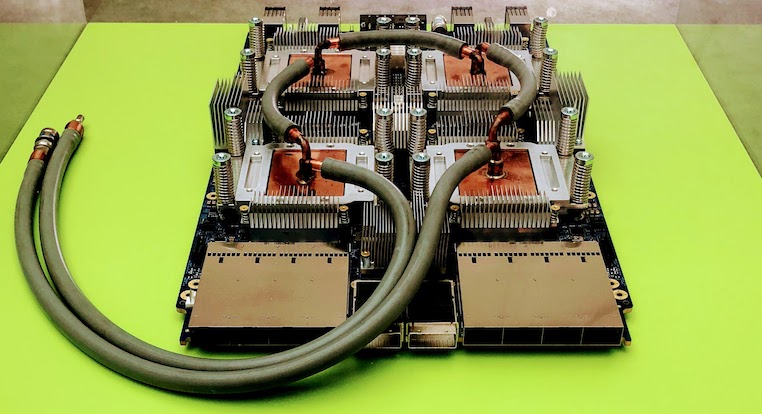

Brain differed from PARC in at least one way: unlike PARC, which infamously failed to commercialize its research, Google productionized projects that were incubated in Brain. Examples include Google Translate, the BERT language model (which informs Google Search), TPUs (hardware accelerators that Google rents to external clients, and uses internally for a variety of production projects), and Google Cloud AI (which sells AutoML as a service). In this sense Google Brain was a natural extension of Larry Page’s desire to work with people who want to do “crazy world-breaking things” while having “one foot in industry” (as Page stated in an interview with Walter Isaacson).

Leaving Google Brain for a PhD was difficult. I had grown accustomed to the perks, and I appreciated the team’s proximity to research. Most of all I loved working alongside a large team on TensorFlow 2.0—I’m passionate about building better tools, for better minds. But I also love the creative expression that research provides.

I’m often asked why I didn’t simply involve myself in research at Brain, instead of enrolling in a PhD program. Here’s why: the zeitgeist had little room for topics other than deep learning and reinforcement learning. Indeed, in 2018, Google rebranded “Google Research” to “Google AI,” redirecting research.google.com to ai.google.com. (The rebranding understandably raised some eyebrows. It appears that the change was quietly rolled back sometime recently, and the Google Research brand has been resurrected.) While I’m interested in machine learning, I’m not convinced that today’s AI is anywhere near as profound as electricity or fire, and I wanted to be trained in a more intellectually diverse environment.

In fact, most of my mentors at Brain encouraged me to enroll in the PhD program. Only one researcher strongly discouraged me from pursuing a PhD, comparing the experience to “psychological torture.” I was so shocked by his dark warning that I didn’t ask any follow-up questions, he didn’t elaborate, and our meeting ended shortly afterwards.

These days, in addition to machine learning, I’m interested in convex optimization, a branch of computational mathematics concerned with making optimal choices. Convex optimization has many real-world applications: SpaceX uses it to land rockets, self-driving cars use it to track trajectories, financial companies use it to design investment portfolios, and, yes, machine learning engineers use it to train models. Although the mathematics is well studied, convex optimization as a technology is still young and niche. I suspect that it has the potential to become a powerful, widely used technology. I’m interested in doing the work, a bit of math and a bit of computer science, to realize its potential. My advisor at Stanford, Stephen Boyd, is perhaps the world’s leading expert on applications of convex optimization, and I simply could not pass up an opportunity to do useful research under his guidance.
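
To make “making optimal choices” a little more concrete, here is a minimal sketch of a toy version of the portfolio example: splitting money between two assets so that the variance of the combined return is as small as possible. The variances and covariance are made-up numbers, and the function names are hypothetical, chosen only for illustration. The point is that the objective is convex in the allocation, so any local minimum is also the global one, and even a simple interval search is guaranteed to find it.

```python
# Toy two-asset minimum-variance portfolio.
# All numbers below are invented for illustration, not real market data.
VAR_A = 0.04    # variance of asset A's return (assumed)
VAR_B = 0.09    # variance of asset B's return (assumed)
COV_AB = 0.01   # covariance between the two returns (assumed)


def portfolio_variance(w, var_a, var_b, cov_ab):
    """Variance of a portfolio holding fraction w in A and (1 - w) in B."""
    return w * w * var_a + (1 - w) ** 2 * var_b + 2 * w * (1 - w) * cov_ab


def minimize_convex(f, lo, hi, tol=1e-7):
    """Ternary search: valid because a convex function has a single valley."""
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) <= f(m2):
            hi = m2   # the minimizer cannot lie to the right of m2
        else:
            lo = m1   # the minimizer cannot lie to the left of m1
    return (lo + hi) / 2


# Numerical answer: search over allocations between 0% and 100% in asset A.
w_star = minimize_convex(
    lambda w: portfolio_variance(w, VAR_A, VAR_B, COV_AB), 0.0, 1.0
)

# For this tiny problem the minimizer also has a closed form (set the
# derivative to zero), which gives an independent check on the search.
w_closed_form = (VAR_B - COV_AB) / (VAR_A + VAR_B - 2 * COV_AB)

print("search:", w_star, "closed form:", w_closed_form)
```

Real problems have many assets and constraints, and would be handed to a solver rather than a one-dimensional search, but the guarantee that makes convex optimization attractive is the same: a solution that looks optimal locally is optimal globally.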

It’s been just over a year since I left Google and started my PhD. Since then, I’ve collaborated with my lab to publish several papers, including one that makes it possible to automatically learn the structure of convex optimization problems, bridging the gap between convex optimization and deep learning. I’m now one of three core developers of CVXPY, an open-source library for convex optimization, and I have total creative control over my research and engineering projects.

There are many things about Google Brain that I miss, my coworkers most of all. But now, at Stanford, I get to collaborate with and learn from an intellectually diverse group of extremely smart and passionate individuals, including pure mathematicians, electrical and chemical engineers, physicists, biologists, and computer scientists.

I’m not sure what I’ll do once I graduate, but for now, I’m having a lot of fun—and learning a ton—doing a bit of math, writing papers, shipping real software, and exploring several lines of research in parallel. If I’m very lucky, one of them might even be a breakthrough.